For the last two years, I've had the privilege of working in a scientific R&D lab that's pushing the frontier of electrochemistry. At the same time, I've witnessed AI systems acquire capabilities at breakneck speed. The resulting productivity gains have so far been primarily limited to the virtual world. While I expect this alone to eventually compound into significant increases in total factor productivity, putting these systems to use in the physical world is going to yield by far the largest dividends.

Where solving climate change and disease is not constrained by will and funding, it is constrained by advances in the natural sciences: electrochemistry, synthetic biology, precision medicine. Apart from the obvious moral imperative to make progress, each of these domains has the potential to unlock trillions of dollars' worth of value.

Fundamentally, there is no reason why the mathematics that let AI systems produce good code wouldn't allow them to produce good science. They're transforming programming first because the training and reinforcement learning environments are high signal-to-noise. If it compiles and the tests pass, tug on the weights and repeat.

In the natural sciences, things are more challenging. A few months ago, a chemist colleague joked that he was jealous of my work because I immediately see the outcome of my experiments. For him, an experiment takes orders of magnitude longer, the data may be messy and the results inconclusive. Under these constraints, constructing an effective learning environment for AI systems is much more difficult.

However, in select areas of the natural sciences and with the right tools, it is possible today. Using such environments, we can train AI systems and begin to automate parts of R&D now, with interfaces to the physical world that are currently available and without being dependent on general robotics. In this post, I'm going to lay out a practical roadmap towards this goal.

Interfacing with the Physical World

From a bird's-eye view, the R&D loop looks like this:

- Review data and generate a new experiment idea

- Set up the experiment

- Run the experiment and collect data

- Process and analyse the data
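Here is a minimal sketch of the four steps as one closed loop, under deliberately toy assumptions. The single parameter (a stirrer speed), the simulated response peaking at 60 rpm and the naive hill-climbing `propose` are all hypothetical stand-ins, not a real experiment or a real AI system. Only the shape of the loop matters here.

```python
import random
from typing import Dict, List, Tuple

History = List[Tuple[float, float]]  # (stirrer speed in rpm, measured yield); hypothetical


def propose(history: History) -> float:
    """Step 1: review data and propose a new setting by perturbing the best one so far."""
    if not history:
        return 50.0  # hypothetical starting point
    best_setting, _ = max(history, key=lambda h: h[1])
    return best_setting + random.uniform(-5.0, 5.0)


def set_up(setting: float) -> Dict[str, float]:
    """Step 2: turn the idea into a concrete configuration (hypothetical point name)."""
    return {"stirrer_rpm": setting}


def run(config: Dict[str, float]) -> float:
    """Step 3: simulated stand-in for a physical experiment, a noisy yield curve peaking at 60 rpm."""
    rpm = config["stirrer_rpm"]
    return -((rpm - 60.0) ** 2) + random.gauss(0.0, 1.0)


def analyse(history: History, setting: float, result: float) -> History:
    """Step 4: record the outcome so the next proposal can build on it."""
    return history + [(setting, result)]


def research_loop(iterations: int = 50, seed: int = 0) -> Tuple[float, float]:
    random.seed(seed)
    history: History = []
    for _ in range(iterations):
        setting = propose(history)
        result = run(set_up(setting))
        history = analyse(history, setting, result)
    return max(history, key=lambda h: h[1])


best_rpm, best_yield = research_loop()
print(f"best setting: {best_rpm:.1f} rpm")
```

In this framing, today's AI systems could plausibly fill in `propose` and `analyse`; `set_up` and `run` are the steps that need an interface to the physical world.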

The AI systems we have today, which we can roughly characterise as LLMs plus research and data analysis harnesses (OpenAI's Deep Research, FutureHouse's Robin, etc.), can autonomously complete steps 1 and 4. To close the loop, we need systems that can work on steps 2 and 3. This requires an interface to the physical world that lets AI systems design, monitor and control experimental setups.

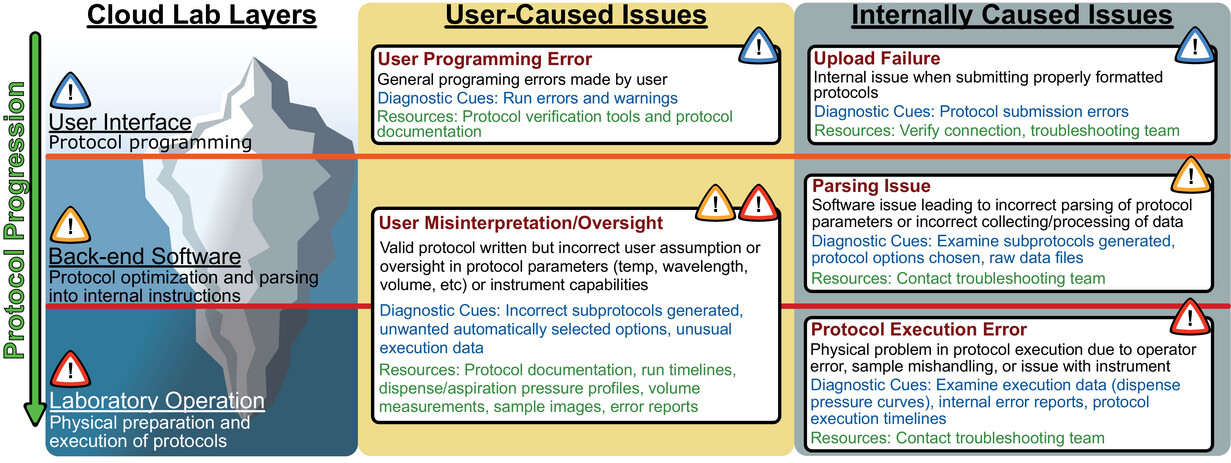

Until we solve general robotics, this completely rules out some areas of the sciences. For instance, I can't think of any way for an AI system to carry out research where blood needs to be drawn from participants. But plenty of experiments in other areas are composed of steps that deal with less real-world complexity. At the extreme end, cloud labs allow researchers (and by extension, AI systems) to programmatically define and carry out a limited set of common experimental steps, such as liquid handling, centrifugation and microscopy.

This is very useful in some contexts. Researchers without access to certain equipment can outsource the relevant experimental steps. And for those experiments that are entirely composed of steps that cloud labs support, high-throughput experimentation is made accessible. This applies, for example, to areas of research in the life sciences such as genomics, where methodologies like incubation and sequencing are standard. But I suspect cloud labs will generally play a limited role in frontier AI-driven experimentation, even though they are already technically capable of letting AI systems run experiments at scale.

First, scientific experimentation at the frontier often involves novel ways of measurement. If you're designing a catalyst with unusual structural properties, for instance, you may have to find creative ways of measuring those properties in the context of your chemical process. But cloud labs are incentivised to only invest in standard measurement devices and methods, because they need to amortise their costs across the customers that are going to use them. This significantly reduces the fraction of experimental steps in frontier research they can carry out.

Second, assembling experimental setups for novel research tends to be complex. Technologies with a low technology readiness level (TRL) tend to have fragmented supply chains. A new bioreactor assembly, for example, may consist of a handful of bespoke components fabricated in-house by a corporate research lab, as well as hundreds of components from tens of suppliers. There is no easy way for a cloud lab to adopt such supply chains from its customers, yet this would be necessary to, say, run high-throughput tests on different variations of that bioreactor assembly.

Finally, research labs at the frontier are likely to have accumulated an unpublished body of knowledge that is required to come up with good new experiments and analyse them (or, in short, "research taste"). To productively outsource part of their research process to cloud labs, they have to be willing to share this body of knowledge. This, in particular, is a big sticking point for corporate labs with IP to protect. Initially, Ginkgo Bioworks, a cloud lab and/or consultancy that allows customers in biotech to outsource high-throughput experimentation, negotiated that it would own the IP that resulted from the research. This model was too unappealing for prospective customers and was retired in 2024 (see slide 22 onwards). Even having to share the IP necessary for Ginkgo to carry out experimentation often dissuades prospective customers.

Scientific Discovery at the Press of a Button, a paper by researchers at Carnegie Mellon University, is an overview of the promise and shortcomings of cloud labs. Notably, Carnegie Mellon University uses cloud labs extensively for parts of its life sciences research and contracted Emerald Cloud Lab, one of the biggest cloud lab operators in the US, to build an entire facility for it. We can therefore expect this paper to reflect the authors' reality accurately, and it indeed describes the aforementioned limitations, amongst others.

As a result, I don't believe independent cloud labs will scale as an interface to the physical world that lets AI systems work on steps 2 and 3 of the R&D loop. To work for frontier research in the natural sciences, the necessary interface needs to sit in the research labs, where it can be used as part of existing processes and supply chains. Concretely, what would be the requirements for such an interface?

Technical and Operational Requirements

On the technical side, it needs to be able to describe how sensors, instruments, motors and a wide variety of other field devices should act during an experiment. For instance, an experimental setup might require that when a sensor reaches a threshold temperature, a valve is closed. A first intuition might be to express these relationships in code, using a library that abstracts away the communication with field devices. After all, this is within the technical capabilities of currently available LLMs. But if we imagine a research lab with many AI systems configuring experimental setups in parallel, this approach presents two problems.
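For illustration, that "first intuition" might look like the following Python sketch. `TemperatureSensor` and `Valve` are hypothetical stand-ins for a device-abstraction library, not the API of any real one.

```python
from dataclasses import dataclass


@dataclass
class TemperatureSensor:
    """Hypothetical driver for a thermocouple; a real library would poll the device."""
    celsius: float

    def read(self) -> float:
        return self.celsius


@dataclass
class Valve:
    """Hypothetical driver for a solenoid valve."""
    is_open: bool = True

    def close(self) -> None:
        self.is_open = False


def control_step(sensor: TemperatureSensor, valve: Valve, threshold_c: float) -> None:
    # The relationship from the text: close the valve once the threshold temperature is reached.
    if valve.is_open and sensor.read() >= threshold_c:
        valve.close()


sensor, valve = TemperatureSensor(celsius=85.0), Valve()
control_step(sensor, valve, threshold_c=80.0)
print(valve.is_open)  # False
```

Nothing in this code stops `threshold_c` from being set to an unsafe value, or `control_step` from growing arbitrary side effects, which is what makes reviewing many such programs hard.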

First, for safety reasons, you would need to be able to enforce that the AI systems cannot configure dangerous experimental setups, perhaps involving a threshold temperature that is too high or control behaviour that leads to feedback loops. Formal verification is the ideal tool to guarantee this, but formally verifying programs, especially imperative programs that are constantly being modified (as new experimental setups are configured), is difficult. This means programmers would need to review the code for each experimental setup and sign off on it, a process that is both error-prone and a bottleneck at scale.

Second, and relatedly, experimental setups should be easily editable by researchers without programming knowledge, ideally via intuitive visual representations. Although a one-to-one mapping between code in general-purpose programming languages and visual representations is possible, these representations become less intuitive as program size and control-flow complexity grow (especially with threading and similar concurrency constructs). Thus, code written in a general-purpose programming language is not the ideal medium.

Another technical requirement: carrying out experiments, during step 3, should require as little AI system involvement as possible. If we imagine one agent attending to one "loop" from step 1 through step 4, we don't want to waste tokens on the minutiae of step 3. As long as AI system performance degrades as context size grows, whether because of the quadratic cost of self-attention or other constraints, we want the majority of our tokens to be produced in steps 1 and 4, because these are most relevant to making scientific progress.

Furthermore, the interface needs to be capable of ingesting huge volumes of data. The comparative advantage of AI scientists over human scientists is that we can trivially clone AI scientists and run them in parallel. Dwarkesh Patel has a great essay on this topic. Parallelisation and scale, in turn, produce more data that needs to be dealt with. Equally important is tagging the data. Physical experiments and the data they produce are messy, and part of the reason why creating high signal-to-noise learning environments over this data is difficult is that cleaning and labelling take time and effort. Ideally, setting up the experiment in step 2 defines a data schema, which is then used to automatically tag incoming data in step 3.
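A minimal sketch of that idea, assuming a per-experiment schema of points: the schema is fixed when the experiment is set up, and every raw reading is tagged from it on arrival. The class names, tag names (`TT101`, `PT201`) and metadata fields are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Iterable


@dataclass(frozen=True)
class PointSpec:
    """Metadata fixed in step 2, when the experiment is set up (hypothetical fields)."""
    name: str
    unit: str
    device: str
    description: str


@dataclass(frozen=True)
class TaggedSample:
    experiment_id: str
    point: PointSpec
    timestamp: datetime
    value: float


class ExperimentSchema:
    def __init__(self, experiment_id: str, points: Iterable[PointSpec]) -> None:
        self.experiment_id = experiment_id
        self._points: Dict[str, PointSpec] = {p.name: p for p in points}

    def tag(self, point_name: str, timestamp: datetime, value: float) -> TaggedSample:
        # A raw reading only carries a point name; the schema supplies the rest of the context.
        spec = self._points.get(point_name)
        if spec is None:
            raise KeyError(f"point {point_name!r} is not part of experiment {self.experiment_id}")
        return TaggedSample(self.experiment_id, spec, timestamp, float(value))


schema = ExperimentSchema("exp-042", [
    PointSpec("TT101", "degC", "thermocouple 1", "reactor outlet temperature"),
    PointSpec("PT201", "bar", "pressure transmitter 1", "reactor inlet pressure"),
])
sample = schema.tag("TT101", datetime(2025, 1, 1, tzinfo=timezone.utc), 71.3)
print(sample.point.unit, sample.value)  # degC 71.3
```

Rejecting readings for unknown points is a design choice: data that doesn't match the schema from step 2 is surfaced immediately rather than silently stored untagged.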

On the operational side, the interface should ideally scale from scientific R&D labs to the deployment environments where the research outcomes are refined to user needs and ultimately generate value, so that research along the entire commercialisation pipeline is workable by AI systems. As an example, let's assume progress has been made on a chemical process in a scientific R&D lab, via an AI system using such an interface. What determines whether this interface is also usable in deployment environments, such as industry and manufacturing?

In short: safety and auditability. In heavily regulated domains like industry and manufacturing, software and hardware platforms need to meet stringent requirements on networking, data lineage, correctness and cybersecurity. If we want a horizontally scalable interface, this constraint effectively shrinks our pool of candidates to the set of interfaces that are certified for industrial and manufacturing use.

Interfaces in Industry and Manufacturing

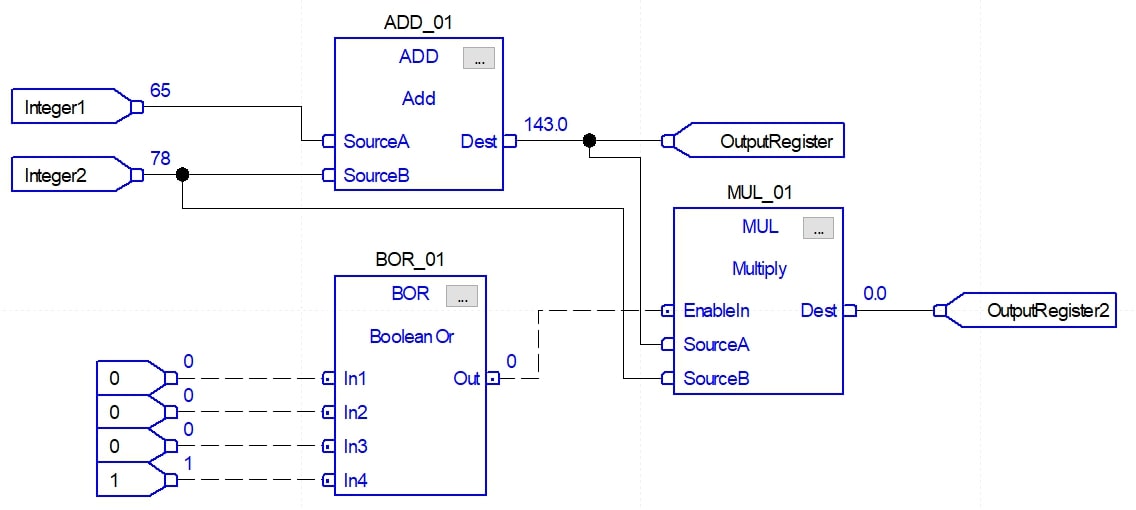

In general, software and hardware platforms that describe and control physical setups in industrial settings fall under the category of industrial control systems. They consist of three building blocks: field devices (sensors and actuators), I/O interfaces (racks that you can plug multiple field devices into) and programmable logic controllers (PLCs, industrial computers that orchestrate the I/O interfaces and field devices). PLCs are the brains of the system and need to be programmed with control logic. While there are several standardised languages for doing so, the one most relevant to our requirements is function block diagrams (FBDs): visual representations of the relationships and behaviours of field devices.
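FBDs are graphical, but their semantics can be sketched textually: blocks compute outputs from named inputs, and wires connect points to block inputs and block outputs to other blocks. This Python analogue is a simplification under stated assumptions (blocks listed in a valid execution order, no typed ports or internal block state) and is not how any PLC tool actually stores FBDs; the block and point names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass(frozen=True)
class FunctionBlock:
    name: str
    fn: Callable[..., Any]
    inputs: Tuple[str, ...]  # each input is a point name or another block's "<name>.out"


def evaluate(network: List[FunctionBlock], points: Dict[str, Any]) -> Dict[str, Any]:
    """Evaluate blocks in list order (assumed topologically sorted) and return all signals."""
    signals = dict(points)
    for block in network:
        args = [signals[source] for source in block.inputs]
        signals[f"{block.name}.out"] = block.fn(*args)
    return signals


# Hypothetical network: the valve stays open only while the temperature is at or below its limit.
network = [
    FunctionBlock("over_temp", lambda temp, limit: temp > limit, ("TT101", "TT101_limit")),
    FunctionBlock("valve_cmd", lambda over: not over, ("over_temp.out",)),
]
signals = evaluate(network, {"TT101": 85.0, "TT101_limit": 80.0})
print(signals["valve_cmd.out"])  # False
```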

Once compiled and deployed to a PLC, each FBD is executed either at a fixed frequency or in response to certain events (perhaps a sensor temperature exceeding a threshold). The register reads and writes are transmitted between the PLCs and the field devices via the I/O interfaces, over industrial protocols such as OPC UA, Modbus or PROFINET. This communication layer only needs to be configured once per field device being installed and is abstracted away from FBDs. Due to this flexibility, a wide variety of field sensors (relevant to natural sciences research, manufacturing and industry) work out of the box. And because PLCs allow configuring custom ways of getting data in and out of FBDs, interfacing with any device or service that exposes an API is theoretically possible.
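The difference between the two execution modes can be simulated in a few lines. This is a simplified sketch: a real IEC 61499 runtime wires explicit event signals between blocks, whereas here an "event" is just a hypothetical temperature reading rising through a threshold.

```python
from typing import Callable, List, Sequence


def run_cyclic(values: Sequence[float], every_n: int, block: Callable[[float], None]) -> int:
    """Fixed-frequency execution: run the block on every n-th scan, whether or not anything changed."""
    executions = 0
    for i, value in enumerate(values):
        if i % every_n == 0:
            block(value)
            executions += 1
    return executions


def run_event_driven(values: Sequence[float], threshold: float, block: Callable[[float], None]) -> int:
    """Event-driven execution: run the block only when the value rises through the threshold."""
    executions = 0
    for previous, value in zip(values, values[1:]):
        if previous < threshold <= value:
            block(value)
            executions += 1
    return executions


readings = [70.0, 75.0, 79.0, 81.0, 83.0, 78.0, 82.0]  # hypothetical temperature scans in degC
fired: List[float] = []
print(run_cyclic(readings, every_n=1, block=fired.append))  # 7
print(run_event_driven(readings, threshold=80.0, block=fired.append))  # 2
```

The cyclic block runs on every scan regardless of whether anything happened; the event-driven one runs only at the two threshold crossings.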

During operation or experiments, the values of the registers, which are called points in industrial contexts, are continuously streamed to database systems called historians, tagged with their names, descriptions, types and relation to field sensors. Historians typically store point values only when they change, which lets them ingest huge amounts of data. Optionally, they can even statistically prune incoming data, for example by removing outliers.
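A minimal sketch of store-on-change with a deadband, one of the simplest exception-based compression schemes; commercial historians typically offer more elaborate ones, such as swinging-door compression. The deadband value and sample data here are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def store_on_change(samples: Sequence[Sample], deadband: float) -> List[Sample]:
    """Keep a sample only if it differs from the last *stored* value by more than the deadband."""
    stored: List[Sample] = []
    last: Optional[float] = None
    for timestamp, value in samples:
        if last is None or abs(value - last) > deadband:
            stored.append((timestamp, value))
            last = value
    return stored


raw = [(0, 20.0), (1, 20.05), (2, 20.08), (3, 20.5), (4, 20.52), (5, 21.0)]  # hypothetical readings
print(store_on_change(raw, deadband=0.1))  # [(0, 20.0), (3, 20.5), (5, 21.0)]
```

Comparing against the last *stored* value rather than the last seen one means slow drifts are still recorded once they accumulate past the deadband.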

The industrial hardware and software I'm describing used to be expensive, and their use was accordingly limited to power generation, manufacturing, industry and the like. But in the last 20 years, these systems have come down the cost curve, primarily because open standards for industrial protocols and PLCs progressively lowered the barriers to entry for new hardware and software providers. A good example is Universal Automation, a vendor-neutral PLC runtime based on the IEC 61499 standard (a runtime being an environment that accepts FBDs, compiles and executes them), which can run on PLCs from a range of manufacturers who are all competing on cost.

In 2025, you can buy an entire industrial control system, including a few field devices, an I/O rack and a PLC, for less than a thousand bucks. And because these systems are also flexible, industrial control systems have been making inroads as a way to set up and control experiments in corporate and academic R&D labs. The EPICS control system for IsoDAR describes how an MIT lab used EPICS, an open-source collection of control system tools, to control and extract data from its proton accelerator. And in the life sciences, the Fraunhofer Center for Chemical-Biotechnological Processes controls its photobioreactors for microalgae cultivation using PLCs. For small-scale experiments with few field devices, the OpenPLC framework is widely used in academia.

On a practical level, this shows that industrial control systems are used at sites ranging from corporate R&D and academia to industry and manufacturing. Thus, if we want the aforementioned steps 2 and 3 to be workable by AI systems across these sites, industrial control systems meet our operational requirements for an interface to the physical world. Do they meet our technical requirements?

Safety and Data Requirements

Step 2 requires AI systems to be able to set up experiments. As I previously wrote, this puts some domains out of reach until we solve general robotics. But in other domains, the amount of human intervention needed to set up a new experiment is low.

Take microbial bioreactors, for instance. To set up a new experiment, a human needs to prepare the vessel and inoculate the broth. But everything beyond this (dilution or perfusion pump rates, PID set-points for temperature, pH and dissolved oxygen, stirrer speed, gas-mix flows, light intensity and sampling pulses) can be configured entirely by creating the necessary points/tags and wiring together the FBDs. The dynamic is similar in areas of chemistry where reactor systems are built and tested in parallel during R&D: the ratio of (A) setup time where a human could be replaced by an AI system with access to the setup via an industrial control system to (B) setup time where a human is needed for physical tasks is high.
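As an example of what one of those configurable blocks does, here is a sketch of a discrete PID block holding a temperature set-point while driving a simulated vessel. The gains, the first-order plant model and the 37 °C set-point are hypothetical, and the block omits the anti-windup and derivative filtering a production PID block would usually include.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Discrete PID block with output clamping (no anti-windup, for brevity)."""
    kp: float
    ki: float
    kd: float
    setpoint: float
    out_min: float = 0.0
    out_max: float = 100.0
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, measurement: float, dt: float) -> float:
        error = self.setpoint - measurement
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return min(self.out_max, max(self.out_min, output))


def simulate(steps: int = 1000, dt: float = 1.0) -> float:
    """Hypothetical first-order vessel: heater power warms it, losses pull it towards 20 degC."""
    pid = PID(kp=2.0, ki=0.05, kd=0.0, setpoint=37.0)
    temperature = 20.0
    for _ in range(steps):
        heater_pct = pid.step(temperature, dt)
        temperature += dt * (0.05 * heater_pct - 0.02 * (temperature - 20.0))
    return temperature


print(round(simulate(), 2))  # 37.0
```

The integral term is what removes the steady-state offset: at equilibrium the heater must still supply the heat lost to the surroundings, which a purely proportional block could only do with a persistent error.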

Concretely, this means such AI systems can reduce the cost of parallelising experimentation by driving the time needed for (A) towards zero. Then, at a scale of hundreds of physical setups in parallel, the most immediate practical question becomes: if AI systems decide what experiments to run and how to run them, how can we guarantee that the physical setups don't enter unsafe states?

Modern PLC runtimes, like those based on the IEC 61499 standard, are event-driven, which means they execute FBDs once user-defined events are triggered, like a point reaching a certain value. Furthermore, FBDs are executed sequentially and can only change point values. This means we can model FBDs as pure functions (without side effects) that take the set of point values as arguments and transform them. Because the whole control program then becomes a composition of deterministic state transformers, safety properties translate to inductive invariants on an explicit state-transition system. This is exactly the form that modern model checkers and theorem provers are built to handle.
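To make the pure-function view concrete, here is a toy explicit-state model checker in Python. Assumptions, all hypothetical: temperatures are discretised to whole degrees, the physics is modelled as a nondeterministic heating or cooling step, and the controller is a single on/off rule. It checks the safety invariant by exhaustively exploring reachable states (rather than by proving an inductive invariant) and returns a counterexample trace if one exists; production tools such as TLC for TLA+ or nuXmv scale far beyond this.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List, Optional, Tuple

State = Tuple[int, bool]  # (vessel temperature in whole degC, heater command); hypothetical


def make_controller(setpoint: int) -> Callable[[State], State]:
    """The control program as a pure function: point values in, new point values out."""
    def control(state: State) -> State:
        temperature, _ = state
        return (temperature, temperature < setpoint)
    return control


def plant(state: State) -> List[State]:
    """Nondeterministic physics: heating adds 1-2 degC per scan, cooling removes 0-1 degC."""
    temperature, heater_on = state
    deltas = (1, 2) if heater_on else (0, -1)
    return [(temperature + d, heater_on) for d in deltas]


def check_invariant(
    initial: Iterable[State],
    control: Callable[[State], State],
    invariant: Callable[[State], bool],
    max_states: int = 100_000,
) -> Optional[List[State]]:
    """Breadth-first search of reachable states; returns a counterexample trace or None."""
    parent: Dict[State, Optional[State]] = {s: None for s in initial}
    queue = deque(parent)
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace: List[State] = []
            cursor: Optional[State] = state
            while cursor is not None:
                trace.append(cursor)
                cursor = parent[cursor]
            return trace[::-1]
        for successor in plant(control(state)):
            if successor not in parent:
                if len(parent) >= max_states:
                    raise RuntimeError("state space too large for this explicit-state check")
                parent[successor] = state
                queue.append(successor)
    return None  # the invariant holds in every reachable state


start = [(t, False) for t in range(20, 26)]
controller = make_controller(setpoint=60)
print(check_invariant(start, controller, lambda s: s[0] <= 65))  # None
print(check_invariant(start, controller, lambda s: s[0] <= 60)[-1])  # (61, True)
```

The second check fails because heating can overshoot the set-point by one degree before the controller switches off, and the returned trace shows exactly how.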

What this means in practice is that you could run a series of formal verification steps on the experimental configurations that the AI systems produce before actually running them. This should hold up at scale: the control program for a single experimental setup is typically small, and automated verification of programs of that size is usually computationally cheap. And unsafe states that unexpectedly arise during an experiment can be handled automatically by the PLC runtime notifying human supervisors, provided the appropriate alarms are configured.
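A sketch of such an alarm, assuming a high-temperature limit with hysteresis: the alarm clears only once the value drops below a lower threshold, which avoids chattering around the limit. `notify` is a hypothetical hook for whatever paging or messaging system a lab uses; the tag and limits are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class HighAlarm:
    """High alarm with hysteresis: trips at trip_at, clears only once the value drops below clear_below."""
    point: str
    trip_at: float
    clear_below: float
    notify: Callable[[str], None]  # hypothetical supervisor notification hook
    active: bool = False

    def __post_init__(self) -> None:
        if self.clear_below >= self.trip_at:
            raise ValueError("clear_below must be lower than trip_at to avoid alarm chattering")

    def update(self, value: float) -> bool:
        if not self.active and value >= self.trip_at:
            self.active = True
            self.notify(f"ALARM {self.point}: {value} >= {self.trip_at}")
        elif self.active and value < self.clear_below:
            self.active = False
            self.notify(f"CLEAR {self.point}: {value} < {self.clear_below}")
        return self.active


messages: List[str] = []
alarm = HighAlarm("TT101", trip_at=90.0, clear_below=85.0, notify=messages.append)
for reading in [80.0, 91.0, 89.0, 92.0, 84.0, 86.0]:
    alarm.update(reading)
print(messages)  # ['ALARM TT101: 91.0 >= 90.0', 'CLEAR TT101: 84.0 < 85.0']
```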

On the requirements for data tagging: configuring the points needed for an experiment (with one point corresponding to each measurement being taken, such as the temperature of a thermocouple) automatically guarantees that incoming data is tagged appropriately during step 3. And because cleaning can happen at ingestion rather than after the experiment (for example, by configuring historians to automatically prune outliers or data that was produced while an alarm was active), this gives us a high signal-to-noise stream of data points to use for training and reinforcement learning.
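The text doesn't specify how outliers are pruned, so this sketch assumes one common robust approach: the modified z-score based on the median absolute deviation (MAD), with the 3.5 cut-off suggested by Iglewicz and Hoaglin, applied after dropping samples taken while an alarm was active. Real historians implement their own, vendor-specific filters; the sample data is hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def clean(samples: Sequence[Sample], alarm_active: Sequence[bool], threshold: float = 3.5) -> List[Sample]:
    """Drop samples taken while an alarm was active, then drop outliers by modified z-score."""
    if len(samples) != len(alarm_active):
        raise ValueError("samples and alarm_active must have the same length")
    kept = [s for s, alarm in zip(samples, alarm_active) if not alarm]
    values = [v for _, v in kept]
    if len(values) < 3:
        return kept
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return kept  # at least half the values are identical; skip outlier pruning
    # Modified z-score: 0.6745 * |x - median| / MAD
    return [(t, v) for t, v in kept if 0.6745 * abs(v - median) / mad <= threshold]


samples = [(0, 20.0), (1, 20.1), (2, 19.9), (3, 20.2), (4, 55.0), (5, 20.0), (6, 35.0)]
alarms = [False, False, False, False, False, False, True]
print([t for t, _ in clean(samples, alarms)])  # [0, 1, 2, 3, 5]
```

The median and MAD are used instead of the mean and standard deviation because a single large spike would otherwise inflate the spread estimate and hide itself.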

More generally, these datasets are a goldmine for allowing AI systems to develop an intuition for the relevant scientific domain. Taken together, the FBDs (describing how field devices act) and the resulting data streams of every point link cause and effect very clearly. If an AI system changes a threshold in a function block, it will see the physical setup adapt in response, via the point data. Over many such iterations, as David Silver puts it in Welcome to the Era of Experience, AI systems can "inhabit streams of experience, rather than short snippets of interaction", the latter being the primary data modality that AI systems like LLMs see during training and deployment today. And this, mediated by reinforcement learning, should lead to models eventually surpassing human abilities in the applicable scientific domains.

First Steps in Practice

Putting all the pieces together, what would the first AI systems with these capabilities look like? How would human scientists collaborate with them? And where are they most likely to be deployed first?

As I've written repeatedly, some scientific domains are going to be able to take advantage of such systems earlier than others. Where setting up and running a new experiment requires little human intervention beyond what an industrial control system can mediate, these systems can save labs the most time. Furthermore, scientific domains where making a priori predictions about experiment outcomes is infeasible, or, more generally, experiment-bottlenecked scientific domains, are also more likely to be early adopters. This is because labs in these areas are forced to increase experimentation throughput to be more productive. They are therefore incentivised to parallelise their experimental setups, which amplifies the comparative advantage that such AI systems have over human scientists: that they, too, can be cloned and run in parallel.

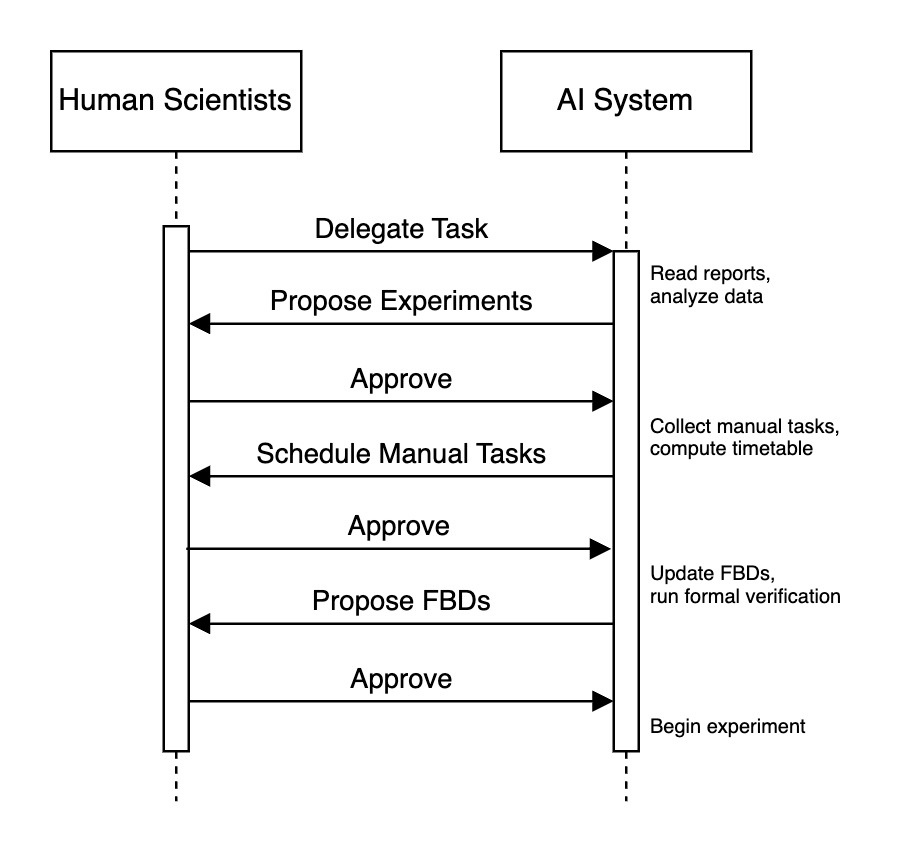

Most probably, these systems will initially be used to automate repetitive or low-value pockets of experimentation. As a concrete example, an R&D lab building chemical reactors may have a number of test stations set up, some of which they could delegate to the AI system. Working with it could proceed as follows.

Human scientists conceive of a set of operating parameters that may increase reactor performance (this is step 1 of the aforementioned "loop"). They upload the relevant reports into their AI system, and the foundation model reads a few more reports from its knowledge base and proposes a set of experiments where each of the operating parameters is slightly tweaked (the LLMs we have today are good enough to make this work). The human scientists approve, the AI system prints out a timetable of tasks requiring manual intervention (such as assembling a reactor) and then gets to work modifying the FBDs for each of the test stations (this is step 2). A formal verification pass is made, ensuring that no safety thresholds will be breached. The FBDs are deployed to the industrial control system and the experiment gets underway, with human supervisors being notified when unexpected experimental states arise (this is step 3). When the experiment completes, the AI system explores the data via the historian, generates reports and notifies the human scientists (this is step 4).
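The simplest systematic version of "tweak each operating parameter slightly" is a one-factor-at-a-time design. The sketch below assumes hypothetical parameter names, a 5% relative step and three delegated test stations; a foundation model might well propose something smarter.

```python
from typing import Dict, List


def one_factor_at_a_time(baseline: Dict[str, float], rel_step: float = 0.05) -> List[Dict[str, float]]:
    """Baseline run plus, for each parameter, one run nudged up and one nudged down by rel_step."""
    runs = [dict(baseline)]
    for name, value in baseline.items():
        for direction in (1.0, -1.0):
            run = dict(baseline)
            run[name] = value * (1.0 + direction * rel_step)
            runs.append(run)
    return runs


def assign_to_stations(runs: List[Dict[str, float]], stations: List[str]) -> Dict[str, List[Dict[str, float]]]:
    """Round-robin the runs over the test stations the lab has delegated to the AI system."""
    schedule: Dict[str, List[Dict[str, float]]] = {s: [] for s in stations}
    for i, run in enumerate(runs):
        schedule[stations[i % len(stations)]].append(run)
    return schedule


baseline = {"temperature_c": 80.0, "pressure_bar": 2.0, "feed_ml_min": 10.0}  # hypothetical parameters
runs = one_factor_at_a_time(baseline)
schedule = assign_to_stations(runs, ["station_a", "station_b", "station_c"])  # hypothetical stations
print(len(runs), [len(v) for v in schedule.values()])  # 7 [3, 2, 2]
```

Changing one parameter per run keeps attribution simple, at the cost of missing interactions between parameters; that trade-off suits the "repetitive, low-value" experiments the text expects to be delegated first.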

Because of the horizontal scalability of industrial control systems from labs to deployment sites like chemical plants, this same AI system could continue to ingest data once these reactors are deployed to customers. All that is required, technically, is exporting the customer's historian and feeding it into the AI system, in exactly the same way this process is done in the lab.
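A sketch of that ingestion step, assuming the historian can export a long-format CSV with `timestamp`, `tag` and `value` columns. Real export formats vary by vendor, and a customer site may need a tag mapping if its names differ from the lab's; the tags and timestamps below are hypothetical.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, TextIO


def load_historian_export(f: TextIO) -> Dict[str, Dict[str, float]]:
    """Group a long-format export (timestamp, tag, value) into {tag: {timestamp: value}}."""
    series: Dict[str, Dict[str, float]] = defaultdict(dict)
    for row in csv.DictReader(f):
        series[row["tag"]][row["timestamp"]] = float(row["value"])
    return dict(series)


lab_export = io.StringIO(
    "timestamp,tag,value\n"
    "2025-01-01T00:00:00Z,TT101,71.3\n"
    "2025-01-01T00:00:00Z,PT201,2.05\n"
    "2025-01-01T00:01:00Z,TT101,71.9\n"
)
data = load_historian_export(lab_export)
print(sorted(data), data["TT101"]["2025-01-01T00:01:00Z"])  # ['PT201', 'TT101'] 71.9
```

Because both sites speak in points and tags, the same function can read a lab export and a customer export; only the file changes.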

If the generated data is utilised correctly for training and reinforcement learning and if we get further progress in foundation models, we can expect steps 1 and 4 to also gradually move into the AI system's scope. Although FutureHouse's Robin, for instance, can already generate novel ideas, I expect this to require some fundamental advances beyond the current state of LLMs, especially in environments where the science is ahead of the published research. For example, frontier corporate R&D labs may have a few years of unpublished scientific advances spread across their internal reports, which are not going to be represented in LLM datasets. In such environments, the only LLMs that I have seen generate new ideas with passable "research taste" have been custom models where I implemented in-context learning techniques, such as those from Google's Titans paper, on top of open-weight LLMs and persisted the resulting learnings across sessions. But such continuously learning models will eventually arrive at scale, and, as I previously wrote, I believe that their ability in scientific domains will result from reinforcement learning on the data generated by these workflows.

Next Steps

I laid out a practical roadmap for using the AI models we have today as part of systems that can automate some R&D in certain domains of the natural sciences. Moving forward, how do we expand these systems to more domains?

Unfortunately, only some kinds of experimental setups are workable by industrial control systems. Setups in physics and chemistry are often well-supported. For any field device you require to instrument your experiment, you'll find a compatible industrial control system. Similarly, any procedure that is currently available to be run in a cloud lab, such as the standard life science methodologies I mentioned previously, can theoretically be controlled via FBDs and PLCs, assuming that APIs for the various measurement instruments are written. But biomedical research involving human participants or examining organisms using microscopy seems much more difficult to address using these systems.

It's worth pointing out that the technical and operational requirements do not constrain us to industrial control systems, per se. For instance, our safety constraints are met by any description of physical experimental setups where the grammar is formally verifiable. I personally think that the visual representation of control logic that FBDs offer makes it easy for human scientists to collaborate with AI systems. But if microscopy, for example, doesn't lend itself to such workflows, or does not require the safety constraints that formal verification gives us, other interfaces may suffice. Flexibility on these requirements will allow AI systems to expand their scope to more domains. But being able to programmatically interface with the experimental setup is a core prerequisite, and scientific domains where this is not possible are unfortunately unlikely to see AI systems meaningfully contribute to steps 2 and 3 of the "research loop" until we solve general robotics.

Realism on this point is important. Part of the motivation for this blog post came from reading somewhat unrealistic writing about AI-driven R&D acceleration that ignores the practical hurdles we'll face actually rolling these systems out. Over the long term, as I wrote in the introduction, I'm very optimistic about the potential. But the road to realising this potential starts with limited AI systems in limited domains, as described in this post, which incrementally acquire scope as practical hurdles are resolved.

In this same practical vein: how do we tie all of the separate bits and pieces, from PLC runtimes to knowledge bases, into a platform that allows human and AI scientists to progress on research together? How would one actually build the training environments and systems I've described? This is what I'm currently spending my free time thinking about. If this interests you, email me!