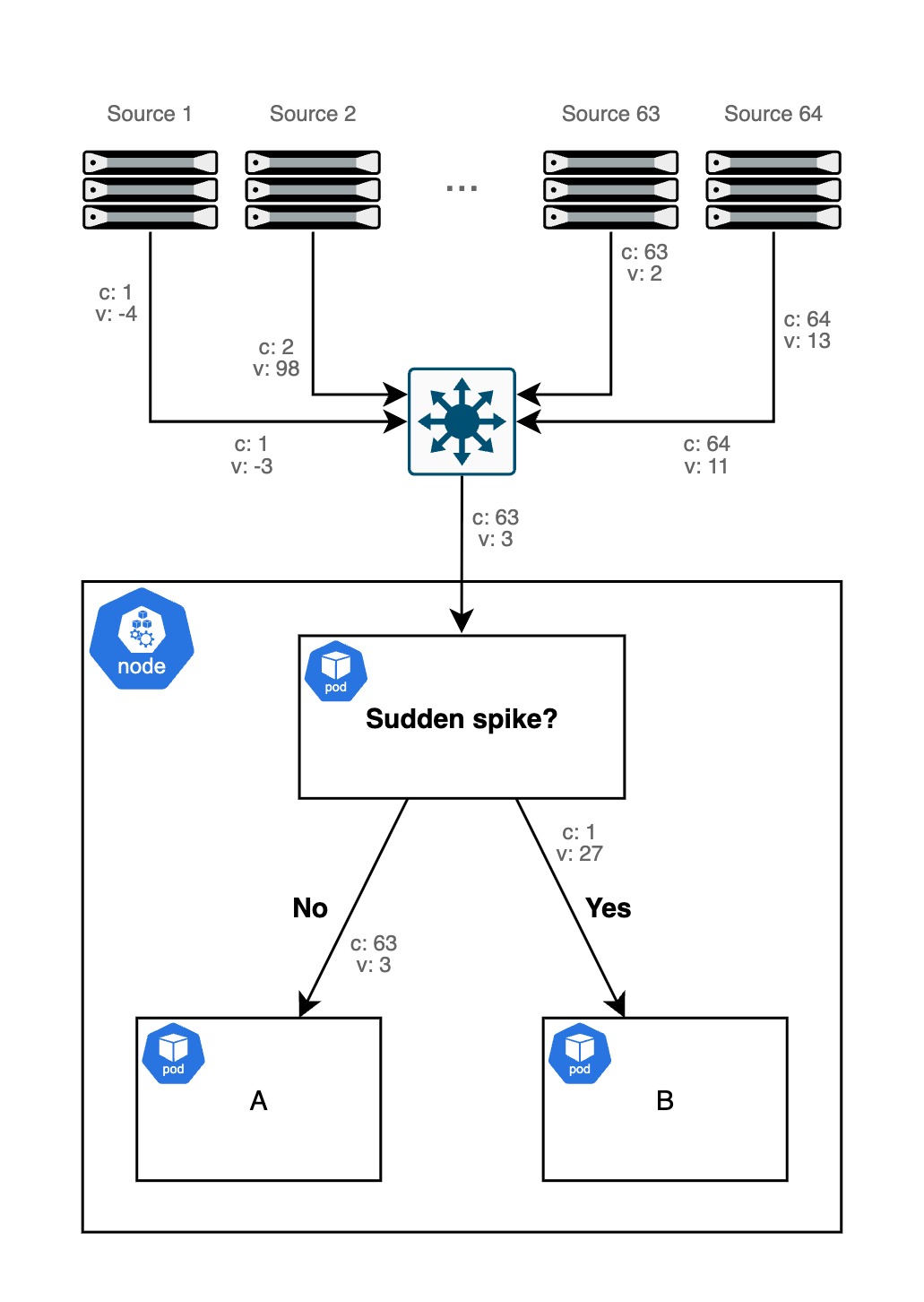

Recently, I was working on a Kubernetes workload that ingests time-series data at a high frequency, analyzes it and accordingly routes packets to downstream pods. In particular, 64 streams (or "channels") of data, where each data point is a decimal value, are produced by 64 sources and sent to the workload as individual UDP packets. When the workload detects a sudden spike in the EWMA (exponentially-weighted moving average) of the decimal values in a channel, it redirects the packets to pod B, whereas they would usually be routed to pod A.

The workload's code is relatively straightforward C++, but its sustainable throughput of ~500k pps was too low for this use case. perf showed that most cycles were spent in the ksoftirqd/NAPI path rather than in user space, because each packet first has to get into and out of the workload and then into the downstream pods. Circumventing this overhead means either (1) moving the processing logic out of the workload and into the kernel (with eBPF as the only sane option), (2) skipping the kernel altogether (DPDK, for example) or (3) routing packets into and out of user space much faster (AF_XDP, for example). Because setting up DPDK or AF_XDP is a bunch of work and requires binding the NIC queues exclusively, I chose to implement the logic in eBPF.

eBPF Program

The core logic extracts the channel and value from each incoming packet, inserts the value into a ring buffer (one per channel), recomputes the channel's EWMA and redirects the packet if the difference between the new and old EWMA exceeds a threshold. To do this, we need to preserve a channel's BUF_DEPTH (in my case, 10) previous values using one of eBPF's map data structures:

    /* Struct for a channel, its recent samples and the EWMA derived from them */
    struct chan_stats {
        struct bpf_spin_lock lock;            /* Avoid cores racing on channels */
        struct sample samples_buf[BUF_DEPTH]; /* Ring buffer for recent samples */
        __u8 next;                            /* Next index to overwrite */
        __u8 count;                           /* Up to BUF_DEPTH */
        __u64 ewma;                           /* Current EWMA << EWMA_SHIFT */
    };

    /* Map for channels and their stats, one slot per channel 0..63 */
    struct {
        __uint(type, BPF_MAP_TYPE_ARRAY);
        __uint(max_entries, 64);
        __type(key, __u32);
        __type(value, struct chan_stats);
    } chan_stats_map SEC(".maps");

We need a spinlock so that two cores receiving packets for the same channel at the same time don't race to update the EWMA. We could avoid races with the per-CPU map type BPF_MAP_TYPE_PERCPU_ARRAY, but then each core would hold its own, globally incorrect slice of the channel's state. And since eBPF doesn't support floating-point arithmetic, we store the EWMA in fixed point: shifting it left by EWMA_SHIFT bits (i.e. multiplying by 2^8) lets us represent fractional averages using only integer math.
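To make the fixed-point idea concrete, here is a minimal user-space sketch of what an EWMA update in this representation could look like. It is not the post's actual update_ewma: the smoothing factor (ALPHA_SHIFT, i.e. alpha = 1/8), the function names and the assumption that samples arrive as unsigned integers are all hypothetical choices for illustration. Only EWMA_SHIFT = 8 comes from the text.

```c
#include <stdint.h>

#define EWMA_SHIFT  8   /* fractional bits, as described in the post */
#define ALPHA_SHIFT 3   /* hypothetical: alpha = 1/2^3 = 0.125 */

/*
 * ewma holds the average scaled by 2^EWMA_SHIFT. The usual update
 *     avg += alpha * (sample - avg)
 * is done with shifts only. The two branches keep the subtraction
 * non-negative, since the values are unsigned.
 */
static inline uint64_t ewma_update(uint64_t ewma, uint32_t sample)
{
    uint64_t scaled = (uint64_t)sample << EWMA_SHIFT;

    if (scaled >= ewma)
        return ewma + ((scaled - ewma) >> ALPHA_SHIFT);
    return ewma - ((ewma - scaled) >> ALPHA_SHIFT);
}

/* Drop the fractional bits to get back an integer average. */
static inline uint32_t ewma_to_int(uint64_t ewma)
{
    return (uint32_t)(ewma >> EWMA_SHIFT);
}
```

Starting from an EWMA of 0, a single sample of 100 moves the stored value to 3200, which reads back as 12 once the 8 fractional bits are dropped (12.5 before truncation). Using a power-of-two alpha avoids division entirely, which is convenient in eBPF but not required.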

In the function body, once we've parsed a packet, looked up the channel's struct chan_stats (cs) and extracted the timestamp (ts) and value (val), we acquire the lock on cs and update the ring buffer and EWMA:

    bool should_redirect = false;

    /* Acquire lock to avoid other cores racing on the same channel */
    bpf_spin_lock(&cs->lock);
    ring_insert(cs, ts, val);

    /* If we haven't filled the buffer yet, skip analysis */
    if (cs->count == BUF_DEPTH) {
        __u64 old_ewma = cs->ewma;

        update_ewma(cs, val);

        __u64 delta = cs->ewma > old_ewma
            ? cs->ewma - old_ewma
            : old_ewma - cs->ewma;

        /*
         * old_ewma is in fixed-point (<< EWMA_SHIFT). Shifting by THRESH_SHIFT
         * gives old_ewma / 2^THRESH_SHIFT, so we redirect when the EWMA moves
         * by more than that fraction of its old value.
         */
        if (delta > (old_ewma >> THRESH_SHIFT))
            should_redirect = true;
    }

    bpf_spin_unlock(&cs->lock);

For now, the eBPF verifier prevents deadlocks from arising at runtime by rejecting any program that calls an eBPF helper while holding the lock. This is quite restrictive and forces us to use the awkward should_redirect variable instead of calling the redirect helpers directly. This is being addressed by Kumar Kartikeya Dwivedi, who is working on a "resilient queued spinlock" that will dynamically check for deadlocks at runtime and guarantee forward progress. More on this here. I'm very much looking forward to reading the implementation!
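The locked section above relies on ring_insert and on the threshold comparison, neither of which is spelled out in full. The following user-space sketch shows one plausible shape for both. The layout of struct sample, the chan_ring type (chan_stats without the lock and EWMA), the ewma_spiked name and THRESH_SHIFT = 2 are assumptions made for illustration. Only BUF_DEPTH = 10 and the comparison itself come from the text.

```c
#include <stdbool.h>
#include <stdint.h>

#define BUF_DEPTH    10  /* value used in the post */
#define THRESH_SHIFT 2   /* hypothetical: threshold = old_ewma / 4 */

struct sample {          /* hypothetical layout */
    uint64_t ts;
    uint32_t val;
};

struct chan_ring {       /* stand-in for chan_stats, minus lock and EWMA */
    struct sample samples_buf[BUF_DEPTH];
    uint8_t next;        /* next index to overwrite */
    uint8_t count;       /* saturates at BUF_DEPTH */
};

/* Overwrite the oldest slot and advance the write index. */
static inline void ring_insert(struct chan_ring *r, uint64_t ts, uint32_t val)
{
    r->samples_buf[r->next].ts = ts;
    r->samples_buf[r->next].val = val;
    r->next = (uint8_t)((r->next + 1) % BUF_DEPTH);
    if (r->count < BUF_DEPTH)
        r->count++;
}

/* True when |new - old| exceeds old / 2^THRESH_SHIFT. */
static inline bool ewma_spiked(uint64_t old_ewma, uint64_t new_ewma)
{
    uint64_t delta = new_ewma > old_ewma ? new_ewma - old_ewma
                                         : old_ewma - new_ewma;

    return delta > (old_ewma >> THRESH_SHIFT);
}
```

With THRESH_SHIFT = 2, an old EWMA of 1000 tolerates a move of up to 250 in either direction, so 1200 does not trigger a redirect but 1300 does. Because both sides of the comparison carry the same fixed-point scaling, the check needs no conversion back to integers.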

If we decide to redirect, we rewrite the L2 header to point at the alternate destination (pod B) and call the redirect helper:

    /*
     * Since bpf_redirect_peer doesn't rewrite the eth header with the correct
     * MAC addresses, we need to do it ourselves. Even though rewriting the
     * source MAC is not strictly necessary, if we don't do it the bridge
     * incorrectly learns the source device's MAC on the pod port.
     */
    bpf_skb_store_bytes(
        skb,
        offsetof(struct ethhdr, h_source),
        host_veth_mac,
        6,
        0
    );
    bpf_skb_store_bytes(
        skb,
        offsetof(struct ethhdr, h_dest),
        container_veth_mac,
        6,
        0
    );

    return bpf_redirect_peer(host_veth_ifindex, 0);

(Normally, we would also rewrite the L3 header so that pod B's network stack doesn't drop the packet because of the now-incorrect destination IP address. But pod B's process reads from a packet socket, so this is irrelevant here.)
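For readers less familiar with the Ethernet header layout, the two bpf_skb_store_bytes calls amount to overwriting bytes 6..11 (source MAC) and 0..5 (destination MAC) of the frame. The sketch below reproduces that on a plain byte buffer in user space. The eth_hdr struct mirrors the kernel's struct ethhdr, and rewrite_macs and MAC_LEN are hypothetical names, not part of the post's source.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAC_LEN 6

/* Same field order as the kernel's struct ethhdr (14 bytes, packed). */
struct eth_hdr {
    uint8_t  h_dest[MAC_LEN];
    uint8_t  h_source[MAC_LEN];
    uint16_t h_proto;
} __attribute__((packed));

/*
 * Overwrite both MAC addresses in place, leaving the EtherType and
 * payload untouched. Returns -1 if the frame is too short to hold
 * an Ethernet header.
 */
static inline int rewrite_macs(uint8_t *frame, size_t len,
                               const uint8_t src[MAC_LEN],
                               const uint8_t dst[MAC_LEN])
{
    if (len < sizeof(struct eth_hdr))
        return -1;

    memcpy(frame + offsetof(struct eth_hdr, h_source), src, MAC_LEN);
    memcpy(frame + offsetof(struct eth_hdr, h_dest), dst, MAC_LEN);
    return 0;
}
```

In the eBPF program the helper does the bounds checking and keeps the skb consistent, which is why the real code goes through bpf_skb_store_bytes rather than writing to packet memory like this.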

The bpf_redirect_peer helper is a performance optimization over the original bpf_redirect helper, which forces the packet through the CPU backlog queue and thus incurs overhead. bpf_redirect_peer bypasses the normal transmit qdisc and softirq path by directly enqueueing the packet onto the peer veth interface’s ingress. Since veth pairs connect hosts and pods in container networking, the helper is used by Cilium, for instance, and is appropriate for this program. More on the different redirect helpers here, from Arthur Chiao.

The source, including the Makefile, can be found here. If you want to play around, note that you'll need a relatively recent kernel (6.6 or later) to take advantage of the fast tcx_ingress attach type, although it should fall back to the clsact qdisc ingress hook on older kernels automatically.

Benchmarking

I used the kernel’s pktgen module to send our UDP packets at increasing rates and configured RSS so they spread across all RX queues. I pinned both the packet generator and the processing workload to dedicated cores to avoid noise due to scheduling. I first benchmarked the original C++ user space workload and wrote down the highest pps it could handle before packet drops, then repeated the same test with the eBPF program. I used perf stat with -e software:context-switches and -e softirq:* to split CPU time between user and softirq contexts.

| Implementation | Sustainable PPS | CPU Usage (cores)                 |
|----------------|-----------------|-----------------------------------|
| C++ user space | ~500,000        | 1.0 core (user 50% / softirq 45%) |
| eBPF           | ~1,200,000      | 0.8 core (user 10% / softirq 80%) |

CPU: AMD EPYC 7232P (8C/16T, 3.1 GHz base)

NIC: Intel X710-DA2 (10 GbE)

Kernel: v6.8

Clang/LLVM: v19.1.0

There is still plenty of low-hanging fruit for further optimization. For a quick win at the start (although I didn't actually benchmark this), I forced the compiler to emit v4 eBPF bytecode with -mcpu=v4, since versions 3 and above use 32-bit ALU ops and we're working with a bunch of 32-bit types. (Note that the compiler defaults to v1!) Writing an XDP program instead would also avoid the skb allocation overhead, but then we couldn't use the bpf_redirect_peer helper (or the bpf_clone_redirect helper, should we later need to first clone and then redirect the packet). We could also use the BPF_MAP_TYPE_PERCPU_ARRAY map type and periodically merge the per-core channel state, but (1) the source devices send packets at a regular cadence, so I suspect that cores won't be waiting for locks much at all and (2) this is much more complex and our implementation is fast enough.

Kernel Access

It still blows my mind how easy eBPF makes moving code into the kernel. I first experimented with kernel development in 2016, when I attempted to write a reliable driver for the Akai MPK Mini mkII MIDI controller. I failed because the building, testing and debugging process was completely inscrutable to me, having only started programming a few years prior. Of course, driver development is not at all the intended use case for eBPF, but the concept alone of being able to write a program and having it verified and run in kernel space a few commands later (with a great ecosystem of tools around it) is crazy to me.

And using these tools, implementing this relatively niche routing logic in eBPF took a mere 200 lines of code. Whether moving business logic into the kernel is a good idea at scale is a different matter entirely, but for this kind of light networking logic, it's at least useful to have the option.